Example 3: ImageNet with keras

Source:vignettes/articles/Example_3_imagenet.Rmd

Example_3_imagenet.RmdThis is a rather short article, just to illustrate the use of the innsight package on a few images of the ImageNet dataset and pre-trained keras models. For more detailed information about the package and the implemented methods we refer to this article and for simpler but in detailed explained examples we kindly recommend to Example 1 and Example 2.

In this example, we want to apply the innsight package on pre-trained models on the ImageNet dataset using keras. This dataset is a classification problem of images in classes containing over images per class. We have selected examples of a few classes each and will analyze them with respect to different networks in the following.

Preprocess images

The original images all have different sizes and the pre-trained models all require an input size of and the channels are zero-centered according to the channel mean of the whole dataset; hence, we need to pre-process the images accordingly, which we will do in the following steps:

# Load required packages

library(keras)

#> The keras package is deprecated. Please use the keras3 package instead.

#> Alternatively, to continue using legacy keras, call `py_require_legacy_keras()`.

library(innsight)

library(torch)

# Set seeds for reproducibility

set.seed(123)

torch_manual_seed(123)

tensorflow::set_random_seed(123)

# Load images

img_files <- paste0("images/", c("image_1.png", "image_2.png", "image_3.png", "image_4.png"))

images <- k_stack(lapply(img_files,

function(path) image_to_array(image_load(path, target_size = c(224, 224)))))

# now 'images' is a batch of 4 images of equal size 224x224x3

dim(images)

#> [1] 4 224 224 3

# preprocess images matching the conditions of the pre-trained models

images <- imagenet_preprocess_input(images)Method configuration

Besides the images, we also need the labels of the

classes, which we get via a trick with the

imagenet_decode_predictions() function:

# get class labels

res <- imagenet_decode_predictions(array(1:1000, dim = c(1,1000)), top = 1000)[[1]]

#> Downloading data from https://storage.googleapis.com/download.tensorflow.org/data/imagenet_class_index.json

#> 8192/35363 [=====>........................] - ETA: 0s35363/35363 [==============================] - 0s 0us/step

imagenet_labels <- res$class_description[order(res$class_name)]Last but not least, we define the configurations for the methods we want to apply to the images and the models. This is a list that contains the method call, method name and the corresponding method arguments. For more information to the methods and the method-specific arguments, we refer to the in-depth vignette.

config <- list(

list(

method = Gradient$new,

method_name = "Gradient",

method_args = list()),

list(

method = SmoothGrad$new,

method_name = "SmoothGrad",

method_args = list(n = 10)),

list(

method = Gradient$new,

method_name = "Gradient x Input",

method_args = list(times_input = TRUE)),

list(

method = LRP$new,

method_name = "LRP (alpha_beta)",

method_args = list(

rule_name = list(BatchNorm_Layer = "pass", Conv2D_Layer = "alpha_beta",

MaxPool2D_Layer = "alpha_beta", Dense_Layer = "alpha_beta",

AvgPool2D_Layer = "alpha_beta"),

rule_param = 1)),

list(

method = LRP$new,

method_name = "LRP (composite)",

method_args = list(

rule_name = list(BatchNorm_Layer = "pass", Conv2D_Layer = "alpha_beta",

MaxPool2D_Layer = "epsilon", AvgPool2D_Layer = "alpha_beta"),

rule_param = list(Conv2D_Layer = 0.5, AvgPool2D_Layer = 0.5,

MaxPool2D_Layer = 0.001))),

list(

method = DeepLift$new,

method_name = "DeepLift (rescale zeros)",

method_args = list()),

list(

method = DeepLift$new,

method_name = "DeepLift (reveal-cancel mean)",

method_args = list(rule_name = "reveal_cancel", x_ref = "mean"))

)In order to keep this article clear, we define a few utility functions below, which will be used later on.

Utility functions

# Function for getting the method arguments

get_method_args <- function(conf, converter, data, output_idx) {

args <- conf$method_args

args$converter <- converter

args$data <- data

args$output_idx <- output_idx

args$channels_first <- FALSE

args$verbose <- FALSE

# for DeepLift use the channel mean

if (!is.null(args$x_ref)) {

mean <- array(apply(as.array(args$data), c(1, 4), mean), dim = c(1,1,1,3))

sd <- array(apply(as.array(args$data), c(1, 4), sd), dim = c(1,1,1,3))

args$x_ref <- torch::torch_randn(c(1,224,224,3)) * sd + mean

}

args

}

apply_innsight <- function(method_conf, pred_df, FUN) {

lapply(seq_len(nrow(pred_df)), # For each image...

function(i) {

do.call(rbind, args = lapply(method_conf, FUN, i = i)) # and each method...

})

}

add_original_images <- function(img_files, gg_plot, num_methods) {

library(png)

img_pngs <- lapply(img_files,

function(path) image_to_array(image_load(path, target_size = c(224, 224))) / 255)

gl <- lapply(img_pngs, grid::rasterGrob)

gl <- append(gl, list(gg_plot))

num_images <- length(img_files)

layout_matrix <- matrix(c(seq_len(num_images),

rep(num_images + 1, num_images * num_methods)),

nrow = num_images)

list(grobs = gl, layout_matrix = layout_matrix)

}Pre-trained model VGG19

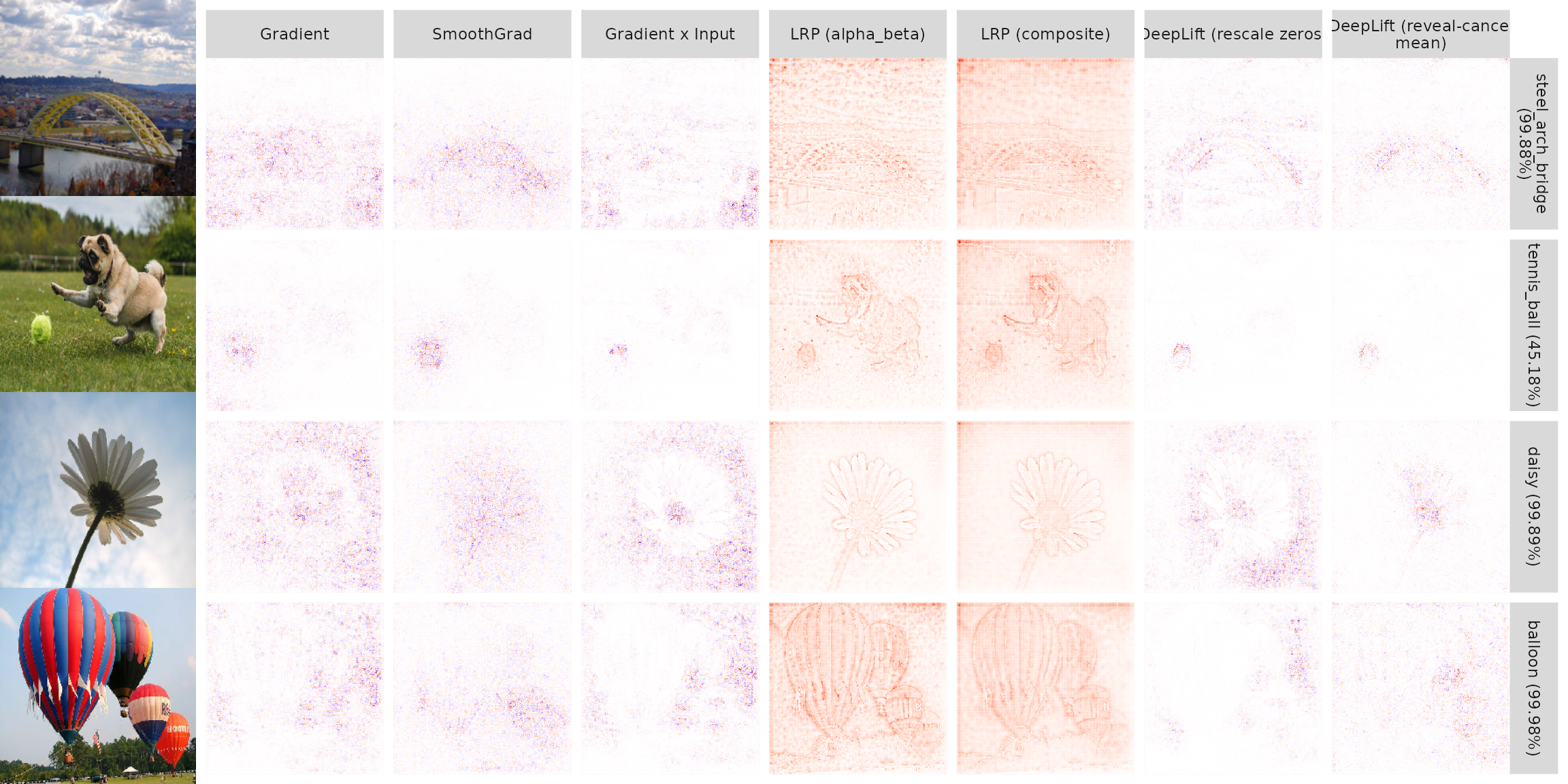

Now let’s analyze the individual images according to the class that

the model VGG19 (see ?application_vgg19 for details)

predicts for them. In the innsight package, these

output classes have to be chosen by ourselves because a calculation for

all

classes would be too computationally expensive. For this reason, we

first determine the corresponding predictions from the model:

# Load the model

model <- application_vgg19(include_top = TRUE, weights = "imagenet")

#> Downloading data from https://storage.googleapis.com/tensorflow/keras-applications/vgg19/vgg19_weights_tf_dim_ordering_tf_kernels.h5

#> 8192/574710816 [..............................] - ETA: 0s 409600/574710816 [..............................] - ETA: 1:11 6037504/574710816 [..............................] - ETA: 14s 12328960/574710816 [..............................] - ETA: 9s 20717568/574710816 [>.............................] - ETA: 7s 29106176/574710816 [>.............................] - ETA: 6s 34332672/574710816 [>.............................] - ETA: 6s 43196416/574710816 [=>............................] - ETA: 5s 45883392/574710816 [=>............................] - ETA: 5s 58466304/574710816 [==>...........................] - ETA: 5s 61882368/574710816 [==>...........................] - ETA: 5s 73146368/574710816 [==>...........................] - ETA: 4s 87482368/574710816 [===>..........................] - ETA: 4s100409344/574710816 [====>.........................] - ETA: 3s109477888/574710816 [====>.........................] - ETA: 3s119283712/574710816 [=====>........................] - ETA: 3s137699328/574710816 [======>.......................] - ETA: 3s148955136/574710816 [======>.......................] - ETA: 2s160169984/574710816 [=======>......................] - ETA: 2s169705472/574710816 [=======>......................] - ETA: 2s180707328/574710816 [========>.....................] - ETA: 2s200728576/574710816 [=========>....................] - ETA: 2s219504640/574710816 [==========>...................] - ETA: 2s228335616/574710816 [==========>...................] - ETA: 2s243015680/574710816 [===========>..................] - ETA: 1s262922240/574710816 [============>.................] - ETA: 1s268328960/574710816 [=============>................] - ETA: 1s288161792/574710816 [==============>...............] - ETA: 1s304668672/574710816 [==============>...............] - ETA: 1s319545344/574710816 [===============>..............] - ETA: 1s326901760/574710816 [================>.............] - ETA: 1s346513408/574710816 [=================>............] - ETA: 1s361218048/574710816 [=================>............] - ETA: 1s381198336/574710816 [==================>...........] - ETA: 1s401137664/574710816 [===================>..........] - ETA: 0s404496384/574710816 [====================>.........] - ETA: 0s424501248/574710816 [=====================>........] - ETA: 0s444342272/574710816 [======================>.......] - ETA: 0s462839808/574710816 [=======================>......] - ETA: 0s482689024/574710816 [========================>.....] - ETA: 0s497197056/574710816 [========================>.....] - ETA: 0s500965376/574710816 [=========================>....] - ETA: 0s507256832/574710816 [=========================>....] - ETA: 0s527212544/574710816 [==========================>...] - ETA: 0s547045376/574710816 [===========================>..] - ETA: 0s566878208/574710816 [============================>.] - ETA: 0s574710816/574710816 [==============================] - 3s 0us/step

# get predictions

pred <- predict(model, images)

#> 1/1 - 6s - 6s/epoch - 6s/step

pred_df <- imagenet_decode_predictions(pred, top = 1)

# store the top prediction with the class label in a data.frame

pred_df <- do.call(rbind, args = lapply(pred_df, function(x) x[1, ]))

# add the model output index as a column

pred_df <- cbind(pred_df, index = apply(pred, 1, which.max))

# show the summary of the output predictions

pred_df

#> class_name class_description score index

#> 1 n04311004 steel_arch_bridge 0.7572445 822

#> 2 n02108422 bull_mastiff 0.4107323 244

#> 3 n11939491 daisy 0.2913894 986

#> 4 n02782093 balloon 0.9997583 418Afterward, we apply all the methods from the configuration

config to the model by first putting it into a

Converter object and then applying the methods to each

image individually.

# Step 1: Convert the model ----------------------------------------------------

converter <- Converter$new(model, output_names = imagenet_labels)

FUN <- function(conf, i) {

# Get method args and add the converter, data, output index

# channels first and verbose arguments

args <- get_method_args(conf, converter, images[i,,,, drop = FALSE],

pred_df$index[i])

# Step 2: Apply method ------------------------------------------------------

method <- do.call(conf$method, args = args)

# Step 3: Get the result as a data.frame ------------------------------------

result <- get_result(method, "data.frame")

result$data <- paste0("data_", i)

result$method <- conf$method_name

# Tidy a bit..

rm(method)

gc()

result

}

result <- apply_innsight(config, pred_df, FUN)

# Combine results and transform into data.table

library(data.table)

result <- data.table(do.call(rbind, result))After the results have been generated and summarized in a

data.table, they can be visualized using

ggplot2:

library(ggplot2)

# First, we take the channels mean

result <- result[, .(value = mean(value)),

by = c("data", "feature", "feature_2", "output_node", "method")]

# Now, we normalize the relevance values for each output, data point and method to [-1, 1]

result <- result[, .(value = value / max(abs(value)), feature = feature, feature_2 = feature_2),

by = c("data", "output_node", "method")]

result$method <- factor(result$method, levels = unique(result$method))

# set probabilities

labels <- paste0(pred_df$class_description, " (", round(pred_df$score * 100, 2), "%)")

result$data <- factor(result$data, levels = unique(result$data), labels = labels)

# Create ggplot2 plot

p <- ggplot(result) +

geom_raster(aes(x = as.numeric(feature_2), y = as.numeric(feature), fill= value)) +

scale_fill_gradient2(guide = "none", mid = "white", low = "blue", high = "red") +

facet_grid(rows = vars(data), cols = vars(method),

labeller = labeller(data = label_wrap_gen(), method = label_wrap_gen())) +

scale_y_reverse(expand = c(0,0), breaks = NULL) +

scale_x_continuous(expand = c(0,0), breaks = NULL) +

labs(x = NULL, y = NULL)

# Create column with the original images and show the combined plot

res <- add_original_images(img_files, p, length(unique(result$method)))

gridExtra::grid.arrange(grobs = res$grobs, layout_matrix = res$layout_matrix)

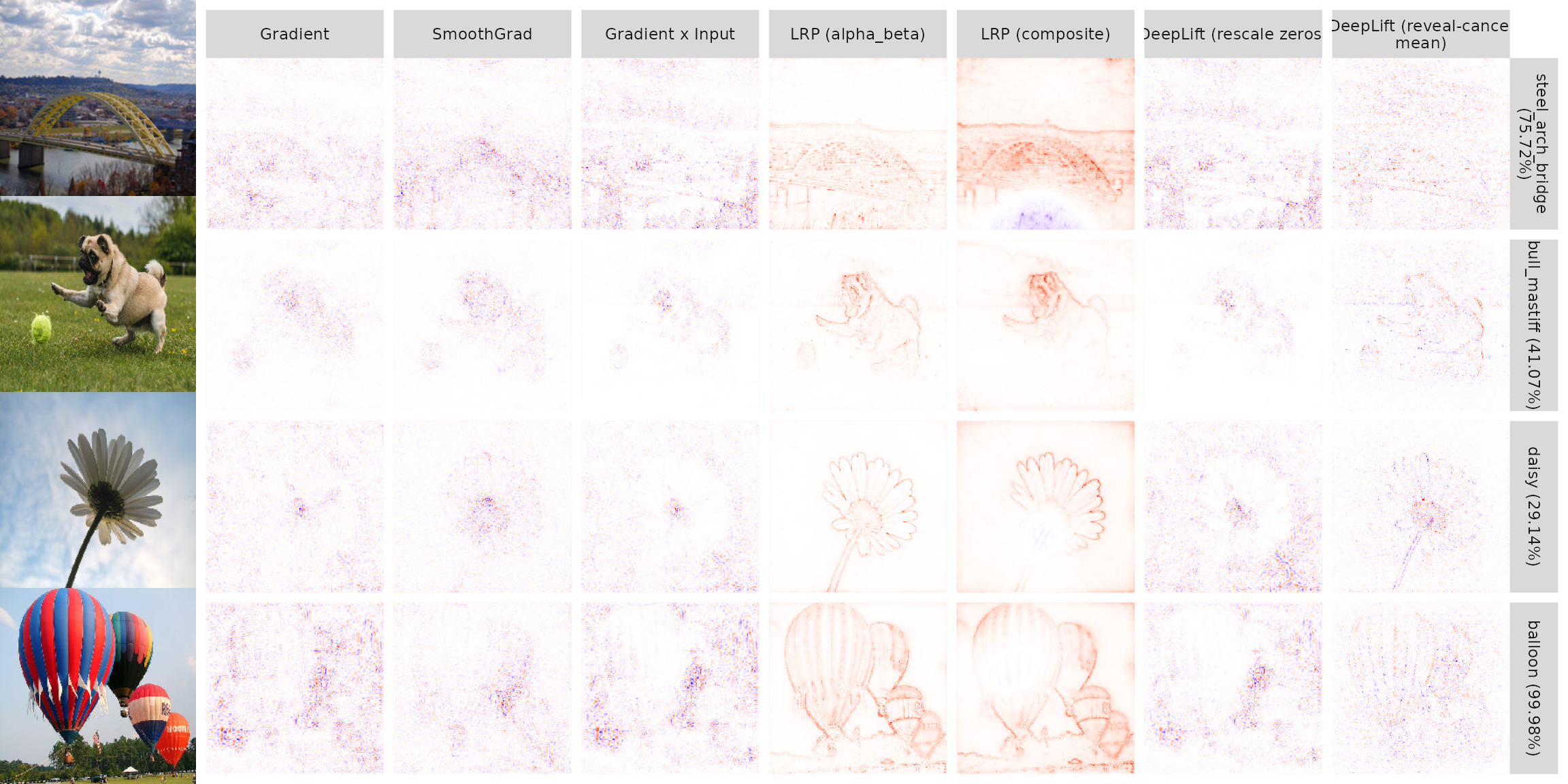

Pre-trained model ResNet50

We can execute these steps to another model analogously:

The exact same steps as in the last section

Load the model ResNet50 (see ?application_resnet50 for

details) and get the predictions:

# Load the model

model <- application_resnet50(include_top = TRUE, weights = "imagenet")

#> Downloading data from https://storage.googleapis.com/tensorflow/keras-applications/resnet/resnet50_weights_tf_dim_ordering_tf_kernels.h5

#> 8192/102967424 [..............................] - ETA: 0s 450560/102967424 [..............................] - ETA: 11s 2367488/102967424 [..............................] - ETA: 6s 9707520/102967424 [=>............................] - ETA: 2s 18096128/102967424 [====>.........................] - ETA: 1s 24387584/102967424 [======>.......................] - ETA: 1s 32776192/102967424 [========>.....................] - ETA: 0s 41164800/102967424 [==========>...................] - ETA: 0s 49553408/102967424 [=============>................] - ETA: 0s 62136320/102967424 [=================>............] - ETA: 0s 67559424/102967424 [==================>...........] - ETA: 0s 78913536/102967424 [=====================>........] - ETA: 0s 85204992/102967424 [=======================>......] - ETA: 0s 97787904/102967424 [===========================>..] - ETA: 0s102967424/102967424 [==============================] - 1s 0us/step

# get predictions

pred <- predict(model, images)

#> 1/1 - 6s - 6s/epoch - 6s/step

pred_df <- imagenet_decode_predictions(pred, top = 1)

# store the top prediction with the class label in a data.frame

pred_df <- do.call(rbind, args = lapply(pred_df, function(x) x[1, ]))

# add the model output index as a column

pred_df <- cbind(pred_df, index = apply(pred, 1, which.max))Apply all methods specified in config to all images:

# Step 1: Convert the model ----------------------------------------------------

converter <- Converter$new(model, output_names = imagenet_labels)

FUN <- function(conf, i) {

# Get method args and add the converter, data, output index

# channels first and verbose arguments

args <- get_method_args(conf, converter, images[i,,,, drop = FALSE],

pred_df$index[i])

# Step 2: Apply method ------------------------------------------------------

method <- do.call(conf$method, args = args)

# Step 3: Get the result as a data.frame ------------------------------------

result <- get_result(method, "data.frame")

result$data <- paste0("data_", i)

result$method <- conf$method_name

# Tidy a bit..

rm(method)

gc()

result

}

result <- apply_innsight(config, pred_df, FUN)

# Combine results and transform into data.table

library(data.table)

result <- data.table(do.call(rbind, result))After the results have been generated and summarized in a

data.table, they can be visualized using

ggplot2:

library(ggplot2)

# First, we take the channels mean

result <- result[, .(value = mean(value)),

by = c("data", "feature", "feature_2", "output_node", "method")]

# Now, we normalize the relevance values for each output, data point and method to [-1, 1]

result <- result[, .(value = value / max(abs(value)), feature = feature, feature_2 = feature_2),

by = c("data", "output_node", "method")]

result$method <- factor(result$method, levels = unique(result$method))

# set probabilities

labels <- paste0(pred_df$class_description, " (", round(pred_df$score * 100, 2), "%)")

result$data <- factor(result$data, levels = unique(result$data), labels = labels)

# Create ggplot2 plot

p <- ggplot(result) +

geom_raster(aes(x = as.numeric(feature_2), y = as.numeric(feature), fill= value)) +

scale_fill_gradient2(guide = "none", mid = "white", low = "blue", high = "red") +

facet_grid(rows = vars(data), cols = vars(method),

labeller = labeller(data = label_wrap_gen(), method = label_wrap_gen())) +

scale_y_reverse(expand = c(0,0), breaks = NULL) +

scale_x_continuous(expand = c(0,0), breaks = NULL) +

labs(x = NULL, y = NULL)

# Create column with the original images and show the combined plot

res <- add_original_images(img_files, p, length(unique(result$method)))Show the result:

gridExtra::grid.arrange(grobs = res$grobs, layout_matrix = res$layout_matrix)